Many beginners in data analysis fall into common pitfalls that can derail their work. Knowing these errors and how to avoid them improves the accuracy and usefulness of the insights you draw from your data. Here are eight common mistakes to watch for on your data analysis journey.

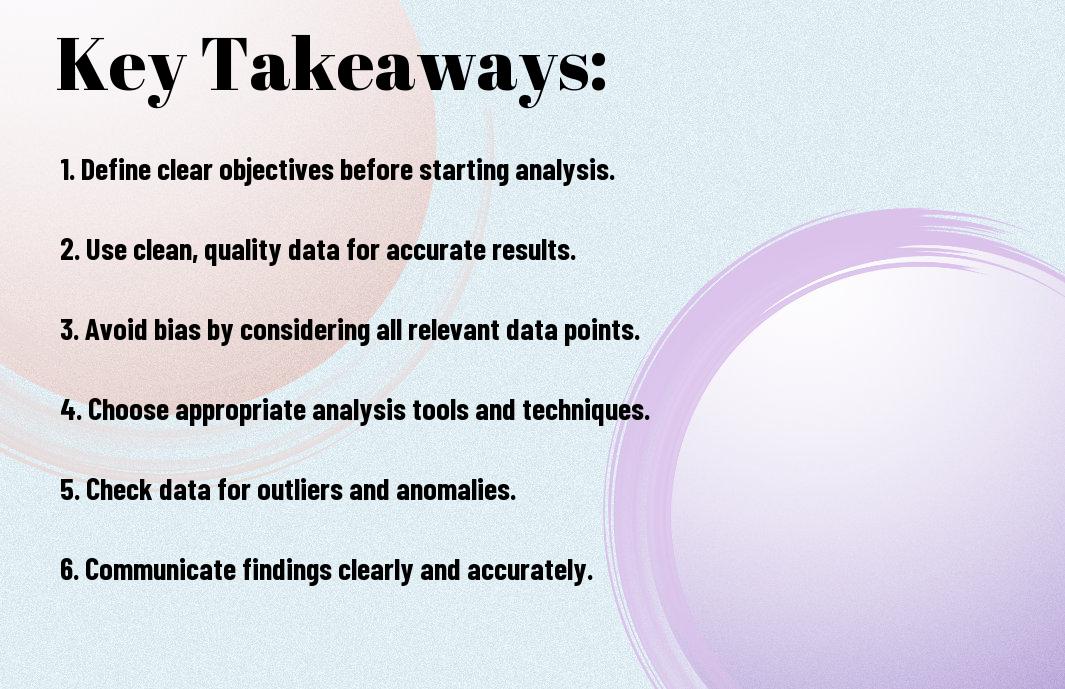

Key Takeaways:

- Define Your Goal: Clearly define the objective of your analysis before you start, so you don’t get lost in the data.

- Understand Your Data: Thoroughly explore and clean your data to ensure accurate analysis results.

- Avoid Overfitting: Do not create a model that is too complex for the data, as it may lead to misleading conclusions.

- Use Appropriate Visualizations: Choose the type of graph or chart that best represents your data insights.

- Validate Your Findings: Always double-check your analysis results to ensure their accuracy and reliability.

- Communicate Clearly: Present your analysis simply and clearly so your audience can follow it.

- Be Mindful of Biases: Acknowledge and address any biases that may influence your data analysis process or results.

Ignoring Data Quality

Inconsistent formatting

One of the most common mistakes in data analysis is ignoring inconsistent formatting within your dataset. Variations in how data is entered or displayed can cause errors in your analysis and produce misleading results. For example, dates entered in different formats (MM/DD/YYYY vs. DD/MM/YYYY) can lead to incorrect conclusions if they are not standardized: 03/04/2024 means March 4 in one convention and April 3 in the other.
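A minimal sketch of standardizing mixed date formats in Python, using only the standard library. It assumes you know which convention each source system uses; the source names and format table are hypothetical, for illustration only.

```python
from datetime import date, datetime

# Hypothetical mapping from source system to its known date convention.
SOURCE_FORMATS = {
    "us_system": "%m/%d/%Y",  # MM/DD/YYYY
    "eu_system": "%d/%m/%Y",  # DD/MM/YYYY
}

def normalize_date(raw: str, source: str) -> date:
    """Parse a date string using the convention of the system it came from."""
    return datetime.strptime(raw, SOURCE_FORMATS[source]).date()

records = [
    ("03/04/2024", "us_system"),  # March 4, 2024
    ("03/04/2024", "eu_system"),  # 3 April 2024
]

# Store every date in one unambiguous format (ISO 8601).
normalized = [normalize_date(raw, src).isoformat() for raw, src in records]
print(normalized)  # ['2024-03-04', '2024-04-03']
```

The same string yields two different dates, which is why the parsing rule has to come from knowledge of the source rather than from guessing.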

To avoid this mistake, always clean and preprocess your data before beginning any analysis. Make sure all data fields are formatted consistently and follow the same conventions. This helps ensure accurate results and saves you time in the long run.

Bear in mind that in data analysis, the devil is in the details. Standardizing formatting may seem tedious, but it is a crucial step in ensuring the quality and reliability of your findings.

Missing values

Missing values are another critical aspect of data quality that you should never ignore. Missing data can skew your analysis and lead to incomplete or inaccurate insights, and any statistical analysis or visualization built on incomplete data can mislead your decision-making.

When you encounter missing values, handle them deliberately. Depending on the context, you can either impute them from the existing data or exclude the affected records from your analysis. Whichever approach you choose, document and justify it.
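The two options can be compared side by side in a short Python sketch (standard library only). The column and its values are hypothetical, and mean imputation is just one simple imputation method, assumed here for illustration; it keeps every row but understates the spread of the data, which is one reason to document the choice.

```python
from statistics import mean

# Hypothetical column in which missing entries are represented as None.
ages = [34, None, 29, 41, None, 38]

# Option 1: exclude incomplete records (complete-case analysis).
complete = [a for a in ages if a is not None]

# Option 2: impute missing entries with the mean of the observed values.
fill_value = mean(complete)
imputed = [a if a is not None else fill_value for a in ages]

print(f"missing: {ages.count(None)} of {len(ages)}")  # missing: 2 of 6
print(complete)  # [34, 29, 41, 38]
print(imputed)   # [34, 35.5, 29, 41, 35.5, 38]
```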

Ignoring missing values can have serious consequences, so address them proactively. Handling missing data correctly helps ensure the validity and reliability of your conclusions.

Failing to Clean Data

Many data analysis mistakes begin with failing to clean your data properly. This step is crucial because the quality of your analysis and results depends heavily on the cleanliness of your data. If you overlook it, you are likely to encounter inaccuracies, inconsistencies, and errors in your analysis.

Outlier detection

Another common mistake is failing to detect and handle outliers properly. Outliers are data points that deviate significantly from the rest of the dataset, and ignoring them can skew your analysis and lead to incorrect conclusions. Identify them with statistical methods, then investigate each one: an outlier may be a data-entry error worth removing or a genuine extreme value worth keeping.
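One widely used statistical method is Tukey's interquartile-range (IQR) rule, sketched below in Python with the standard library. The 1.5 multiplier is the conventional default, assumed here, and the order counts are hypothetical; the function only flags values, leaving the keep-or-remove decision to you.

```python
from statistics import quantiles

def iqr_outliers(values, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical daily order counts with one suspicious spike.
orders = [52, 48, 50, 55, 47, 51, 49, 53, 250]
print(iqr_outliers(orders))  # [250]
```

Because quartiles are barely affected by a single extreme value, the IQR rule stays usable even when the outlier itself would distort a mean-and-standard-deviation cutoff.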

Handling duplicates

For a more accurate analysis, you must identify and remove duplicate entries in your dataset. Duplicates can arise from data entry errors, system bugs, or merging multiple datasets. If left in place, they inflate metrics such as counts and totals and lead to misleading insights. Before diving into your analysis, make sure you have eliminated duplicate records to maintain the integrity of your data.

A common technique for handling duplicates is to use a unique identifier, such as a customer or transaction ID, to find and remove duplicate entries. Deduplicating your data prevents double counting and ensures that each record contributes exactly once to your analysis.
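A minimal Python sketch of ID-based deduplication. The field name `customer_id` and the keep-the-first-occurrence policy are assumptions for illustration; in practice you may need a rule for which copy to keep when duplicates disagree.

```python
def deduplicate(records, key="customer_id"):
    """Keep the first record seen for each unique identifier."""
    seen = set()
    unique = []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique

# Hypothetical merge of two exports that both contain customer 102.
rows = [
    {"customer_id": 101, "spend": 40.0},
    {"customer_id": 102, "spend": 25.0},
    {"customer_id": 102, "spend": 25.0},  # duplicate from the second export
    {"customer_id": 103, "spend": 60.0},
]

clean = deduplicate(rows)
print(sum(r["spend"] for r in rows))   # 150.0 (customer 102 double-counted)
print(sum(r["spend"] for r in clean))  # 125.0
```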

Detecting outliers and handling duplicates are important data-cleaning steps that can significantly affect the quality of your analysis. By paying attention to them and applying appropriate cleaning strategies, you get more reliable and accurate results. Thorough data cleaning is the foundation for robust and trustworthy insights.

Misinterpreting Correlation

Misinterpreting correlation is a critical error that can lead to flawed conclusions in data analysis. Correlation does not imply causation: it indicates only that two variables change together, not that one causes the other to change.

Causation vs correlation

You’ll often encounter situations where two variables are correlated even though neither causes the other. To establish causation, you need further evidence, such as controlled experiments or causal inference techniques. Without that evidence, assuming a causal relationship from correlation alone can lead to erroneous conclusions and misguided decisions.

Confounding variables

Another common mistake in interpreting correlations is overlooking confounding variables. A confounding variable is a third factor that influences both variables under study, which can produce a spurious correlation between them. For example, ice cream consumption and sunburns are correlated, but the confounding variable is sun exposure: sunny weather drives both. Failing to account for confounders can result in misleading interpretations of the relationship between the variables of interest.

When analyzing correlations, consider the plausible confounding variables that could be influencing the results. Controlling for them through experimental design or statistical methods makes it more likely that the observed correlation reflects a true relationship between the variables of interest. This strengthens the validity of your analysis and helps you draw accurate conclusions from the data.

Inadequate Sampling

Inadequate sampling is another critical error that can significantly affect the outcome of your analysis. Sampling means selecting a subset of a larger population in order to draw conclusions about the entire group. If your sample is unrepresentative or too small, your results may not reflect reality, so sound sampling practices are essential for reliable and valid insights.

Sample size issues

Sample size plays a crucial role in the accuracy and generalizability of your results. If your sample is too small, you may not capture the variability present in the population, leading to skewed or inaccurate conclusions. If it is much larger than necessary, you may waste time and resources collecting and analyzing data that adds little precision. Calculating the required sample size with standard statistical methods helps you strike the right balance.
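As one example of such a calculation, Cochran's formula gives the sample size needed to estimate a proportion within a chosen margin of error: n = z² · p(1 − p) / e². The Python sketch below assumes simple random sampling from a large population and uses the conservative default p = 0.5 when the true proportion is unknown.

```python
import math
from statistics import NormalDist

def sample_size_for_proportion(margin_of_error, confidence=0.95, p=0.5):
    """Cochran's formula n = z^2 * p * (1 - p) / e^2, rounded up.

    Assumes simple random sampling from a large population.
    """
    # Two-sided critical value, e.g. about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.ceil(z**2 * p * (1 - p) / margin_of_error**2)

print(sample_size_for_proportion(0.05))  # 385
print(sample_size_for_proportion(0.03))  # 1068
```

Tightening the margin from 5 to 3 percentage points nearly triples the required sample, which illustrates the cost side of the balance described above.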

Biased sampling methods

The methods you use to draw a sample can introduce bias into your analysis. Biased sampling leads to systematic errors in your results and undermines the validity of your findings. Common types include selection bias, where certain groups are overrepresented or underrepresented in the sample, and non-response bias, where people who decline to participate differ systematically from those who do. Be aware of these biases and take steps to minimize their impact on your analysis.

The way you select your sample has a significant impact on the reliability and validity of your analysis, and addressing potential sampling biases is crucial for ensuring that your results reflect the population you are studying. Random sampling, stratified sampling, and other bias-reducing methods increase the trustworthiness of your findings and support better-informed decisions.
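Stratified sampling with proportional allocation can be sketched in Python as follows: the population is split into groups (strata) and the same fraction is drawn at random from each, so every group keeps its population share. The urban/rural population is hypothetical, and rounding the per-stratum count is a simplifying assumption.

```python
import random
from collections import defaultdict

def stratified_sample(population, stratum_of, fraction, seed=None):
    """Draw the same fraction at random from every stratum (proportional allocation)."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for item in population:
        strata[stratum_of(item)].append(item)
    sample = []
    for members in strata.values():
        k = round(len(members) * fraction)  # per-stratum sample size
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical population: 800 urban and 200 rural respondents.
population = [("urban", i) for i in range(800)] + [("rural", i) for i in range(200)]
sample = stratified_sample(population, stratum_of=lambda r: r[0], fraction=0.1, seed=42)
urban = sum(1 for r in sample if r[0] == "urban")
rural = sum(1 for r in sample if r[0] == "rural")
print(urban, rural)  # 80 20
```

A simple random sample of 100 would match the 80/20 split only on average; stratifying guarantees it for every draw.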

Overfitting Models

Overfitting is a pitfall that many analysts fall into. It occurs when a model is so complex that it captures noise in the data rather than the underlying relationships. The result is a model that performs very well on the data it was trained on but fails to generalize to new, unseen data, which can lead to misleading conclusions and poor decisions.

Model complexity

When it comes to model complexity, balance is key. Your model needs enough complexity to capture the underlying patterns in the data, but a model that is too complex will fit the noise, leading to overfitting. Conversely, a model that is too simple may miss important features of the data, leading to underfitting. Finding the right level of complexity is crucial for a reliable and robust analysis.

Regularization techniques

Regularization techniques may sound complex, but they are powerful tools for combating overfitting. They add a penalty term to the model’s loss function that discourages large coefficient values, which keeps the model from fitting noise and improves how well it generalizes. Common techniques include L1 (Lasso) and L2 (Ridge) regularization, which penalize the absolute and squared values of the coefficients, respectively.
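For a single centered feature with no intercept (a simplifying assumption), both penalties have closed-form solutions, which makes their behavior easy to see. The Python sketch below minimizes Σ(y − w·x)² + λw² for Ridge and Σ(y − w·x)² + λ|w| for Lasso; the toy data is hypothetical.

```python
def ridge_slope(xs, ys, lam):
    """Minimize sum((y - w*x)^2) + lam * w^2 for one centered feature."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

def lasso_slope(xs, ys, lam):
    """Minimize sum((y - w*x)^2) + lam * |w| for one centered feature.

    The solution is the least-squares numerator soft-thresholded by lam / 2.
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    shrunk = max(abs(sxy) - lam / 2, 0.0)
    return (shrunk if sxy >= 0 else -shrunk) / sxx

# Toy centered data with a weak positive relationship (least-squares slope 0.5).
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [-1.0, 0.5, 0.0, -0.5, 2.0]

for lam in (0.0, 4.0, 10.0):
    print(f"lambda={lam}: ridge w={ridge_slope(xs, ys, lam):.3f}, "
          f"lasso w={lasso_slope(xs, ys, lam):.3f}")
```

As λ grows, Ridge shrinks the slope toward zero (0.5, then about 0.357, then 0.25) without reaching it, while Lasso drives it to exactly zero at λ = 10. This is why L1 regularization is also used for feature selection.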

Regularization is an important safeguard against overfitting during model training, and incorporating it into your analysis improves the robustness and reliability of your models. Keep in mind that the goal of data analysis is not just to fit the data you have but also to make accurate predictions on new data.

Misusing Statistical Tests

To keep your analysis accurate and reliable, avoid misusing statistical tests. Misuse leads to incorrect conclusions and, ultimately, to poor decisions based on those results. Understanding how to apply statistical tests properly improves the quality of your data analysis.

Hypothesis testing

A critical mistake in hypothesis testing is failing to define your null and alternative hypotheses properly before running the test. State both hypotheses up front to make sure you are testing the right question. Misinterpreting p-values is another common source of error: the p-value is not the probability that the null hypothesis is true; it is the probability of obtaining results at least as extreme as those observed, assuming the null hypothesis is true.
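A permutation test makes that definition concrete: it simulates the null hypothesis directly and counts how often a result at least as extreme as the observed one appears. The Python sketch below tests a difference in means under the assumption that, under the null, group labels are exchangeable. The measurements are hypothetical, and the add-one correction is a common convention, assumed here.

```python
import random
from statistics import mean

def permutation_test(a, b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    Estimates the probability, under the null hypothesis that group labels
    are exchangeable, of a difference at least as extreme as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # relabel the data at random
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            extreme += 1
    # Add-one correction so the estimate is never exactly zero.
    return (extreme + 1) / (n_permutations + 1)

# Hypothetical measurements from two groups.
control = [12.1, 11.8, 12.4, 12.0, 11.9]
treatment = [12.6, 12.9, 12.3, 12.8, 13.0]
print(f"p = {permutation_test(control, treatment):.4f}")
```

A small p-value says the observed difference would be rare if the labels did not matter; it does not say how likely the null hypothesis itself is.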

Test assumptions

Even the most robust statistical tests rest on assumptions that must hold for the results to be valid. Check the assumptions of the test you are using and confirm that your data meets them. Common assumptions include normality, homogeneity of variances, and independence of observations; violating them can produce biased, unreliable, or invalid results.

Test assumptions are not optional guidelines; they are requirements for the statistical test to be valid. If your data violates them, consider an alternative method, such as a nonparametric test, or transform your data so it meets the requirements. Addressing assumptions up front protects the reliability and validity of your results.
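As an illustration of transforming data, a log transform is a common remedy for right-skewed values. The Python sketch below measures moment-based skewness (m3 / m2^1.5) before and after the transform; the doubling series is hypothetical and chosen so the effect is easy to see. In practice you would re-check the test's assumptions on the transformed data, for example with a normality test.

```python
import math

def skewness(values):
    """Moment-based sample skewness g1 = m3 / m2**1.5."""
    n = len(values)
    mu = sum(values) / n
    m2 = sum((v - mu) ** 2 for v in values) / n
    m3 = sum((v - mu) ** 3 for v in values) / n
    return m3 / m2 ** 1.5

# Hypothetical right-skewed measurements (each value doubles the previous one).
raw = [1, 2, 4, 8, 16, 32, 64]
logged = [math.log(v) for v in raw]

print(f"skewness before: {skewness(raw):.2f}")
print(f"skewness after log: {abs(skewness(logged)):.2f}")
```

Because each value doubles the last, the logged values are evenly spaced, so their skewness is essentially zero while the raw values are strongly right-skewed.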

Failing to Validate Models

Even a well-developed model can lead you astray if you fail to validate it properly. Validation checks that your model performs well on unseen data and generalizes well enough to make reliable predictions. If you skip this step, you risk deploying a flawed model that produces misleading results and undermines your decision-making.

Model evaluation metrics

When validating your model, use evaluation metrics that suit the problem. Common classification metrics such as accuracy, precision, recall, and F1-score show how well your model predicts outcomes. Choose the metrics that match your objectives, because the wrong ones can misrepresent your model’s effectiveness: on imbalanced data, for instance, accuracy can look high even when the model misses most positive cases.
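The imbalance problem is easy to reproduce by computing the four metrics by hand from the confusion-matrix counts, as in this Python sketch. The labels are hypothetical, and returning 0.0 when a ratio is undefined (no predicted or actual positives) is a convention assumed here.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    accuracy = (tp + tn) / len(y_true)
    # Undefined ratios are reported as 0.0 (an assumed convention).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical imbalanced labels: only 2 of 10 cases are positive.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
always_negative = [0] * 10
print(classification_metrics(y_true, always_negative))
# {'accuracy': 0.8, 'precision': 0.0, 'recall': 0.0, 'f1': 0.0}
```

A model that never predicts the positive class still reaches 80% accuracy here, while recall and F1 expose that it is useless for finding positives.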

Cross-validation methods

Another critical aspect of model validation is using a suitable cross-validation method. Techniques such as k-fold cross-validation evaluate the model on several different subsets of the data, which gives a more reliable estimate of its performance than a single split and helps reveal overfitting. Incorporating cross-validation into your validation process increases confidence in your model and in the decisions based on its results.

Cross-validation also lets you detect problems with your model’s generalization and address them before deployment, which improves the overall quality of your analysis and the credibility of your findings.

Failing to validate your models properly can undermine the entire analysis and diminish the trustworthiness of your results. Neglecting steps such as choosing appropriate evaluation metrics and using effective cross-validation exposes your analysis to inaccuracies and biases. Take the time to validate your models thoroughly so that they are robust, reliable, and genuinely useful for decision-making.

Ignoring Contextual Factors

Overlooking contextual factors can lead to inaccurate conclusions and flawed insights. Contextual factors are the external influences and domain knowledge that shape how data should be interpreted; ignoring them means making decisions based on incomplete information.

External influences

External influences such as economic conditions, cultural norms, or political events can significantly affect the data you are analyzing. They can introduce bias or confounding variables that distort the true relationships in the data, so consider them and incorporate them into your analysis to keep your findings accurate and reliable.

Domain knowledge

Contextual factors also include your domain knowledge or expertise in the subject area you are analyzing. Without a deep understanding of the context in which the data was collected, you may misinterpret the results or overlook important patterns. Your domain knowledge allows you to ask the right questions, make informed assumptions, and contextualize the data appropriately for meaningful analysis.

External influences and domain knowledge play a critical role in data analysis. Acknowledging these contextual factors and incorporating them into your analysis helps make your conclusions accurate and actionable. Bear in mind that data analysis is not just about crunching numbers; it’s about understanding the story behind the data to make informed decisions.

Final Words

Ultimately, mastering data analysis is crucial in today’s data-driven world. By avoiding common mistakes such as overlooking outliers, using inappropriate visualizations, or neglecting data cleaning, you can improve the accuracy and reliability of your analysis. Familiarize yourself with the best practices and tools that streamline your process and keep your insights robust and actionable.

Bear in mind that each step in the data analysis process affects the final outcome. Pay attention to your data quality, take the time to understand the context of your analysis, and always question your assumptions. By staying mindful of these common pitfalls and continuously improving your skills, you can strengthen your data analysis capabilities and make better-informed, data-driven decisions.

Don’t let these common mistakes hinder your data analysis efforts. Stay vigilant, stay curious, and always strive for precision in your analysis. With practice and a proactive approach to learning from your mistakes, you can become a proficient data analyst who adds value to your organization and drives strategic decision-making. Keep these lessons in mind as you embark on your data analysis journey, and remember that each mistake is an opportunity to grow and refine your analytical skills.